14 Using Google Forms as a Framework to Capture Student-Generated Questions

Michael Guy

Introduction

Student-generated questioning (SGQ) is a collaborative, open approach to assessment and content creation that contributes to the development of question databanks (Jhangiani, 2017). It also gives learners opportunities to think critically about, and reflect on, the concepts being learned. Pedagogically, it can promote deeper learning and provide insight into assessment techniques (Chin & Osborne, 2008). SGQ is flexible in implementation. A particularly good method is described in a blog post by Dr Rajiv Jhangiani of Kwantlen Polytechnic University, British Columbia, Canada. His implementation is as follows:

- Students write 4 questions each week: 2 factual (e.g., a definition or evidence-based prediction) and 2 applied (e.g., scenario-based).

- In the first two weeks, students write one plausible distractor. The question stem, the correct answer, and 2 plausible distractors are provided. Learners peer-review questions written by 3 randomly assigned peers. The entire procedure is double-blind and is carried out using Google Forms for submission and Google Sheets for peer review.

- In the next two weeks, students write two plausible distractors and follow the rest of the procedure.

- In the next two weeks, students write all 3 plausible distractors and follow the rest of the procedure.

- For the remainder of the semester, they write the stem, the correct answer, and all the distractors.

(Jhangiani, 2017)
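The staged schedule can be expressed as a small lookup from week number to what each student writes. The Python sketch below is illustrative only. It assumes the two-week stages run consecutively from week 1 and that every item has 3 distractors. `Scaffold` and `scaffold_for_week` are hypothetical names and are not part of Jhangiani's materials.

```python
from dataclasses import dataclass

# Assumption: every item has a stem, one correct answer and 3 distractors,
# matching the "all 3 plausible distractors" stage of the schedule.
TOTAL_DISTRACTORS = 3


@dataclass(frozen=True)
class Scaffold:
    """What the student writes versus what is provided for one item."""
    student_writes_stem_and_key: bool  # True once students write the stem and correct answer
    distractors_written: int           # plausible distractors the student writes
    distractors_provided: int          # plausible distractors supplied with the item


def scaffold_for_week(week: int) -> Scaffold:
    """Map a 1-based week number onto the staged schedule (hypothetical helper)."""
    if week < 1:
        raise ValueError("week must be 1 or greater")
    if week >= 7:
        # Remainder of the semester: students write the whole item.
        return Scaffold(True, TOTAL_DISTRACTORS, 0)
    # Weeks 1-2 -> 1 distractor, weeks 3-4 -> 2, weeks 5-6 -> 3.
    written = (week + 1) // 2
    return Scaffold(False, written, TOTAL_DISTRACTORS - written)
```

For example, `scaffold_for_week(3)` describes an item where the stem, the correct answer and one distractor are provided, and the student writes the other two distractors.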

William (Will) Thurber, an Associate Teaching Professor in the Faculty of Business and IT at Ontario Tech University, implemented SGQ in a first-year Critical Thinking and Ethics class and a third-year Marketing Communications class. Professor Thurber's approach is similar to Dr Jhangiani's, with some notable differences:

- Each week, students choose 1 of 3 readings on which to develop questions.

- In the first 3 weeks, students create distractors for 3 questions drawn from a small pool of questions.

- From the fourth week on, a link to a Google Form is provided for each reading. Students choose 1 reading and create 3 questions, including correct answers and distractors.

- Students receive a grade by uploading the email receipt of their submitted questions.

- Each week, students take a 10-question multiple-choice quiz drawn from a question pool. Half of the pool questions are contributed by Professor Thurber and half are student-generated. Each quiz consists of 50% of the previous week's pool.
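The pool and quiz arithmetic can be sketched in Python as follows. The sketch reads "each quiz consists of 50% of the previous week's pool" to mean that half of each quiz's questions come from last week's pool. That reading is an assumption. `PoolItem`, `build_pool` and `build_quiz` are hypothetical names. In practice, an LMS quiz tool would usually do the random drawing.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PoolItem:
    item_id: str
    author: str  # "instructor" or "student"


def build_pool(instructor_items, student_items, size, rng=None):
    """Assemble a weekly pool that is half instructor-written, half student-written."""
    rng = rng or random.Random()
    half = size // 2
    return rng.sample(instructor_items, half) + rng.sample(student_items, size - half)


def build_quiz(current_pool, previous_pool, size=10, rng=None):
    """Draw a quiz with half its questions from the previous week's pool.

    Assumption: "each quiz consists of 50% of the previous week's pool" is read
    here as "half of each quiz's questions come from last week's pool".
    """
    rng = rng or random.Random()
    from_previous = size // 2
    quiz = (rng.sample(previous_pool, from_previous)
            + rng.sample(current_pool, size - from_previous))
    rng.shuffle(quiz)  # interleave old and new questions
    return quiz
```

`random.sample` raises `ValueError` if a pool holds fewer items than requested, so each weekly pool needs at least 5 questions for a 10-question quiz. Passing a seeded `random.Random` makes a draw reproducible.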

Student-generated questions can also be used in group assignments to foster collaboration and improve question quality.

Resources

- A Google account

- Use of a learning management system (optional)

- Use of an item analysis tool (optional)

Steps for Implementation

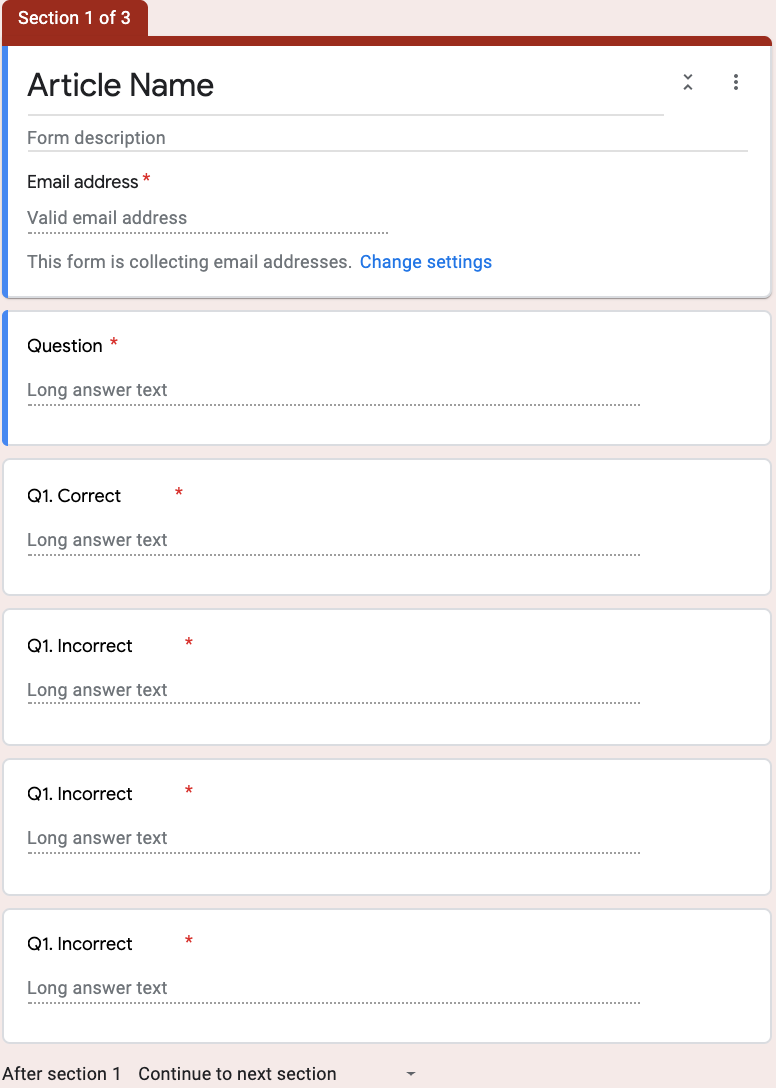

Step 1 – Create a Google Form

- Enter the name of the assignment (replace the “Article Name” title).

- Optionally, provide a description.

- Add a Paragraph question to store the student-generated question stem.

- Add further Paragraph questions to store the correct answer and each of the distractors you want students to create.

- Repeat the process until the form matches the number of questions you want students to generate.
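Because each question occupies a fixed run of Paragraph fields, exported responses can be split back into individual items. The Python sketch below assumes that responses are exported as CSV. It also assumes that each question's fields appear in form order (stem, correct answer, then distractors) and that a fixed number of leading columns, such as a timestamp and an email address, precede the answers. Adjust `leading_columns` to match your own sheet. `Item` and `parse_responses` are hypothetical names.

```python
import csv
import io
from dataclasses import dataclass, field


@dataclass
class Item:
    stem: str
    correct: str  # assumes the correct answer is entered before the distractors
    distractors: list = field(default_factory=list)


def parse_responses(csv_text, questions_per_form=3, distractors_per_question=3,
                    leading_columns=2):
    """Turn exported form responses into Item records.

    leading_columns: columns before the first answer (for example a timestamp
    and an email address); adjust to match your own response sheet.
    """
    width = 2 + distractors_per_question  # stem + correct answer + distractors
    expected = questions_per_form * width
    reader = csv.reader(io.StringIO(csv_text))
    next(reader, None)  # skip the header row
    items = []
    for row in reader:
        if not any(cell.strip() for cell in row):
            continue  # ignore blank rows
        answers = [cell.strip() for cell in row[leading_columns:]]
        if len(answers) != expected:
            raise ValueError(f"expected {expected} answer columns, got {len(answers)}")
        for q in range(questions_per_form):
            stem, correct, *distractors = answers[q * width:(q + 1) * width]
            items.append(Item(stem, correct, distractors))
    return items
```

The column-count check catches forms that were edited after responses started arriving, which would otherwise silently misalign stems and answers.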

For consistency, the correct answer should always be entered as the first choice. The answer order can then be randomised later, either by installing a script or through the learning management system.
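The randomisation step itself is simple once the correct answer is known to come first. The Python sketch below only illustrates the logic. It is not the installable script mentioned above, which for Google Forms would typically be written in Google Apps Script. `shuffle_choices` is a hypothetical name.

```python
import random


def shuffle_choices(correct, distractors, rng=None):
    """Return (choices, answer_index) with the correct answer at a random position."""
    if correct in distractors:
        # A distractor identical to the key makes the item ambiguous.
        raise ValueError("a distractor duplicates the correct answer")
    rng = rng or random.Random()
    choices = [correct, *distractors]  # correct answer entered first, as in the form
    rng.shuffle(choices)
    return choices, choices.index(correct)
```

Returning the new index alongside the shuffled choices keeps the answer key intact, which an LMS import needs.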

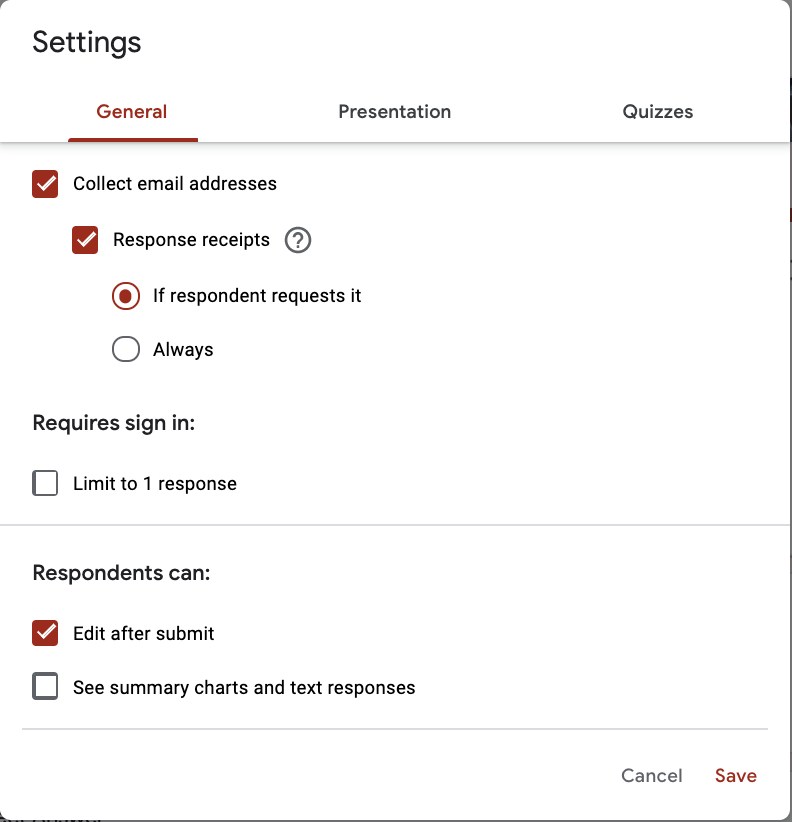

Step 2 – Form Settings

- Choose to collect email addresses if you want to grade submissions or confirm completion.

- Checking ‘Response receipts’ sends students a copy of their submission by email.

- If your institution has a G Suite account, you can restrict responses to members of your institution.

- You can limit each student to 1 response or allow multiple submissions.

- If peer review is part of your implementation, you can allow students to edit their submission after they have received feedback.
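When email addresses are collected and multiple submissions are allowed, you may want to keep only each student's latest submission and check it against a class list. The Python sketch below assumes that responses have been exported as (timestamp, email, payload) tuples with timestamps in `%Y-%m-%d %H:%M:%S` format. Both the tuple shape and the format are assumptions, because exported timestamp formats vary by locale. `latest_submissions` and `completion_report` are hypothetical names.

```python
from datetime import datetime


def latest_submissions(responses, fmt="%Y-%m-%d %H:%M:%S"):
    """Keep each student's most recent submission.

    responses: iterable of (timestamp, email, payload) tuples.
    fmt: timestamp format of your export (an assumption; adjust to your locale).
    """
    latest = {}
    for timestamp, email, payload in responses:
        when = datetime.strptime(timestamp, fmt)
        key = email.strip().lower()  # treat addresses case-insensitively
        if key not in latest or when > latest[key][0]:
            latest[key] = (when, payload)
    return {email: payload for email, (_, payload) in latest.items()}


def completion_report(roster, responses, fmt="%Y-%m-%d %H:%M:%S"):
    """Map each roster email to True if that student has submitted at least once."""
    submitted = latest_submissions(responses, fmt)
    return {student: student.strip().lower() in submitted for student in roster}
```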

Top Tips for Success

- Actively participate with students.

  - Encourage discussion and reflection as part of the process.

  - Generate question stems.

  - Select the best items and add them to a question pool alongside your own questions.

- Gradual introduction.

  - Start with a single distractor and continue in a staggered fashion until learners are ready to create their own questions.

- Provide guidelines for writing multiple-choice questions.

  - Introduce the mechanics of writing multiple-choice questions.

  - Introduce Bloom’s Taxonomy and encourage higher-order questions as students become more comfortable.

- Item Analysis

  - Use an item analysis tool to measure the effectiveness of the questions and determine whether any should be reworked.

- Reflection

  - Survey students and reflect on their feedback.
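If no item analysis tool is available, two classical statistics are easy to compute from 0/1 quiz scores. Difficulty (p) is the proportion of students who answer an item correctly. Discrimination (D) is the proportion correct in a high-scoring group minus the proportion correct in a low-scoring group, and a common convention uses the top and bottom 27% of students. The Python sketch below is an illustration, not a replacement for a dedicated tool. The review thresholds in `flag_for_review` are assumed rules of thumb, not fixed standards.

```python
def item_analysis(scores, group_fraction=0.27):
    """Classical item statistics for dichotomously scored (0/1) items.

    scores: one list per student, one 0/1 entry per item.
    Returns (difficulty p, discrimination D) for each item, where p is the
    proportion answering correctly and D is the upper-group minus lower-group
    proportion correct.
    """
    if not scores or not scores[0]:
        raise ValueError("need at least one student and one item")
    n_students, n_items = len(scores), len(scores[0])
    totals = [sum(row) for row in scores]
    order = sorted(range(n_students), key=lambda i: totals[i])  # low to high total
    k = max(1, round(n_students * group_fraction))
    lower, upper = order[:k], order[-k:]
    results = []
    for j in range(n_items):
        p = sum(row[j] for row in scores) / n_students
        d = (sum(scores[i][j] for i in upper) - sum(scores[i][j] for i in lower)) / k
        results.append((p, d))
    return results


def flag_for_review(results, p_range=(0.2, 0.9), min_d=0.2):
    """Indices of items outside rule-of-thumb thresholds (assumed values)."""
    low, high = p_range
    return [j for j, (p, d) in enumerate(results)
            if (not low <= p <= high) or d < min_d]
```

An item with low or negative D is often one where a distractor attracted strong students, which makes it a good candidate for rework before it returns to the question pool.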

Further Reading

Aflalo, E. (2018). Students generating questions as a way of learning. Active Learning in Higher Education. https://doi.org/10.1177/1469787418769120

Understanding Item Analyses. (n.d.). Retrieved from https://www.washington.edu/assessment/scanning-scoring/scoring/reports/item-analysis

Pittenger, A. L., & Lounsbery, J. L. (2011). Student-generated questions to assess learning in an online orientation to pharmacy course. American Journal of Pharmaceutical Education, 75(5), 94. https://doi.org/10.5688/ajpe75594

Heer, R. (n.d.). A Model of Learning Objectives–based on A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives [PDF]. Center for Excellence in Learning and Teaching, Iowa State University. Retrieved December 10, 2019, from http://www.celt.iastate.edu/wp-content/uploads/2015/09/RevisedBloomsHandout-1.pdf

Digital resources

Student-Generated Questions Sample Form

References

Chin, C., & Osborne, J. (2008). Students’ questions: A potential resource for teaching and learning science. Studies in Science Education, 44(1), 1–39. https://doi.org/10.1080/03057260701828101

Jhangiani, R. (2017, January 13). Why have students answer questions when they can write them? Retrieved November 26, 2019, from https://thatpsychprof.com/why-have-students-answer-questions-when-they-can-write-them/

Author

Mick Guy is an Educational Technology (EdTech) Analyst in the Teaching and Learning Centre at Ontario Tech University. His role involves working with Faculty Development Officers to develop solutions and to support faculty with complex pedagogical uses of technology. He works closely with IT and educational partners to ensure that technological initiatives are delivered. He also develops applications and educational solutions using open-source products and application programming interfaces (APIs).

He previously held a variety of roles on IT projects at financial institutions and in commercial real estate, among others. In 2011, he completed a certificate program in Adult Education, which facilitated his move into the EdTech field; he joined Ontario Tech University in 2012. His interests centre on using learning analytics to improve the learner experience.